Hand Gesture Recognition for an Off-the-Shelf Radar by Electromagnetic Modeling and Inversion

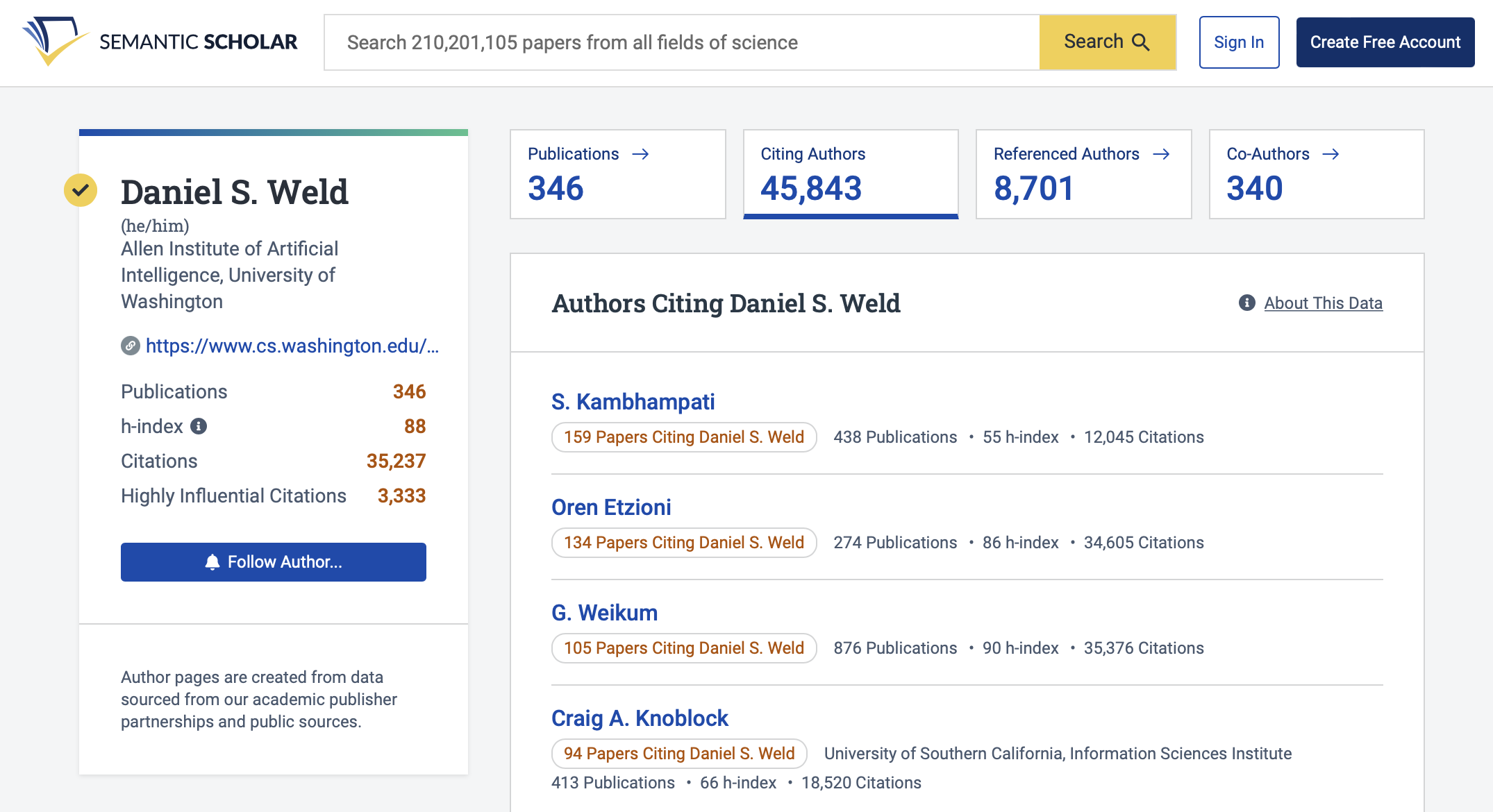

Microwave radar sensors for human-computer interaction offer several advantages over wearable and image-based sensors, such as privacy preservation, high reliability regardless of ambient and lighting conditions, and a larger field of view. However, the raw signals produced by such radars are high-dimensional and relatively complex to interpret. Advanced data processing, including machine learning techniques, is therefore necessary for gesture recognition. While these approaches can reach high gesture recognition accuracy, artificial neural networks require a significant number of gesture templates for training, and calibration is radar-specific. To address these challenges, we present a novel data processing pipeline for hand gesture recognition that combines advanced full-wave electromagnetic modelling and inversion with machine learning. In particular, the physical model accounts for the radar source, the radar antennas, the radar-target interactions, and the target itself, i.e., the hand in our case. To make this processing feasible, the hand is emulated by an equivalent infinite planar reflector, for which analytical Green’s functions exist. The apparent dielectric permittivity, which depends on the hand size, electric properties, and orientation, determines the wave reflection amplitude based on the distance from the hand to the radar. Full-wave inversion of the radar data retrieves both the physical distance and this apparent permittivity, thereby reducing the dimension of the radar dataset by several orders of magnitude while keeping the essential information. Finally, the estimated distance and apparent permittivity as a function of gesture time are used to train the machine learning algorithm for gesture recognition.
This physically-based dimension reduction enables the use of simple gesture recognition algorithms, such as template-matching recognizers, that can be trained in real time and provide competitive accuracy with only a few samples. We evaluate significant stages of our pipeline on a dataset of 16 gesture classes, with 5 templates per class, recorded with the Walabot, a lightweight, off-the-shelf array radar. We also compare these results with those obtained with an ultra-wideband radar made of a single horn antenna and a lightweight vector network analyzer, and with a Leap Motion Controller.
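
To illustrate how a template-matching recognizer could operate on the reduced features, the following is a minimal sketch in Python. It assumes that each gesture is available as a time series of (distance, apparent permittivity) pairs and uses a generic nearest-neighbour matcher with resampling and per-feature normalization. The abstract does not specify which recognizer is used, so the class `TemplateRecognizer`, its parameters, and the synthetic trajectories are hypothetical and are not the authors' implementation or measured data.

```python
import numpy as np


def resample(traj, n_points=32):
    """Linearly resample a (T, F) trajectory to n_points samples over normalized time."""
    traj = np.asarray(traj, dtype=float)
    t_old = np.linspace(0.0, 1.0, len(traj))
    t_new = np.linspace(0.0, 1.0, n_points)
    columns = [np.interp(t_new, t_old, traj[:, k]) for k in range(traj.shape[1])]
    return np.column_stack(columns)


def normalize(traj):
    """Z-score each feature so distance and permittivity have comparable scales."""
    mean = traj.mean(axis=0)
    std = traj.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return (traj - mean) / std


class TemplateRecognizer:
    """Hypothetical nearest-neighbour template matcher (an assumption, not the paper's)."""

    def __init__(self, n_points=32):
        self.n_points = n_points
        self.templates = []  # list of (label, preprocessed trajectory)

    def _preprocess(self, traj):
        return normalize(resample(traj, self.n_points))

    def add_template(self, label, traj):
        """Training is just storing a preprocessed template, so it is immediate."""
        self.templates.append((label, self._preprocess(traj)))

    def recognize(self, traj):
        """Return the label of the template with the smallest mean point-wise distance."""
        if not self.templates:
            raise ValueError("no templates have been added")
        candidate = self._preprocess(traj)
        scores = [
            (float(np.linalg.norm(candidate - tpl, axis=1).mean()), label)
            for label, tpl in self.templates
        ]
        return min(scores)[1]


# Synthetic, illustrative trajectories: columns are (distance in m, apparent permittivity).
t = np.linspace(0.0, 1.0, 50)
push = np.column_stack([0.4 - 0.3 * t, 2.0 + 3.0 * t])  # hand approaches the radar
pull = np.column_stack([0.1 + 0.3 * t, 5.0 - 3.0 * t])  # hand moves away

recognizer = TemplateRecognizer()
recognizer.add_template("push", push)
recognizer.add_template("pull", pull)

# A new gesture with a different duration and slightly different shape.
t2 = np.linspace(0.0, 1.0, 80)
candidate = np.column_stack([0.45 - 0.3 * t2**1.2, 2.2 + 2.8 * t2])
predicted = recognizer.recognize(candidate)
```

Because adding a template only stores a resampled and normalized copy of the trajectory, such a recognizer needs no iterative training, which is consistent with the few-sample, real-time training setting described above. Note that the per-feature normalization in this sketch discards absolute distance and permittivity levels; whether that is desirable depends on the gesture set.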

TLDR

This paper presents a novel data processing pipeline for hand gesture recognition that combines advanced full-wave electromagnetic modelling and inversion with machine learning. The pipeline enables the use of simple gesture recognition algorithms, such as template-matching recognizers, that can be trained in real time and provide competitive accuracy with only a few samples.