Locate the right papers, and spend your time reading what matters to you.

The TLDR feature from Semantic Scholar puts automatically generated single-sentence paper summaries right on the search results page.

What Are TLDRs?

TLDRs (Too Long; Didn't Read) are super-short summaries of the main objective and results of a scientific paper, generated using expert background knowledge and the latest GPT-3-style NLP techniques. This new feature is available in beta for nearly 60 million papers in computer science, biology, and medicine.

Staying up to date with scientific literature is an important part of any researcher's workflow, and parsing a long list of papers from various sources by reading paper abstracts is time-consuming.

TLDRs help users make quick, informed decisions about which papers are relevant and where to invest time in further reading. TLDRs also provide ready-made paper summaries for explaining the work in various contexts, such as sharing a paper on social media.

TLDRs are now available in the Semantic Scholar API. You can reference the documentation, or visit our API page for more information.
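As a rough illustration of requesting a TLDR through the API, here is a minimal Python sketch. The base URL, the `fields=title,tldr` query parameter, and the response shape (a `tldr` object with a `text` entry) are assumptions about the public Graph API rather than details stated on this page, so check them against the documentation. The helper names `build_paper_url`, `extract_tldr`, and `fetch_tldr` are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed base URL of the Semantic Scholar Graph API; confirm against the docs.
API_BASE = "https://api.semanticscholar.org/graph/v1"


def build_paper_url(paper_id, fields=("title", "tldr")):
    """Build a paper-lookup URL that asks for the listed fields."""
    # Keep ":" unescaped so prefixed identifiers stay readable in the path.
    quoted_id = urllib.parse.quote(paper_id, safe=":")
    query = urllib.parse.urlencode({"fields": ",".join(fields)})
    return f"{API_BASE}/paper/{quoted_id}?{query}"


def extract_tldr(payload):
    """Return the TLDR text from a decoded response, or None if absent."""
    # Assumed shape: {"tldr": {"text": "..."}}; "tldr" may be missing or null.
    tldr = payload.get("tldr")
    if isinstance(tldr, dict):
        return tldr.get("text")
    return None


def fetch_tldr(paper_id, timeout=10.0):
    """Fetch one paper over HTTP and return its TLDR text (needs network access)."""
    with urllib.request.urlopen(build_paper_url(paper_id), timeout=timeout) as resp:
        return extract_tldr(json.load(resp))
```

Calling `fetch_tldr` with a paper identifier returns the summary sentence, or `None` when the paper has no TLDR in the response. Keeping the parsing in `extract_tldr` makes it easy to check that case without a network call.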

What People Are Saying

"Information overload is a top problem facing scientists. Semantic Scholar's automatically generated TLDRs help researchers quickly decide which papers to add to their reading list."

Isabel Cachola

Johns Hopkins University PhD Student, Former Pre-Doctoral Young Investigator at AI2, and Author of TLDR: Extreme Summarization of Scientific Documents

"People often ask why are TLDRs better than abstracts, but the two serve completely different purposes. Since TLDRs are 20 words instead of 200, they are much faster to skim."

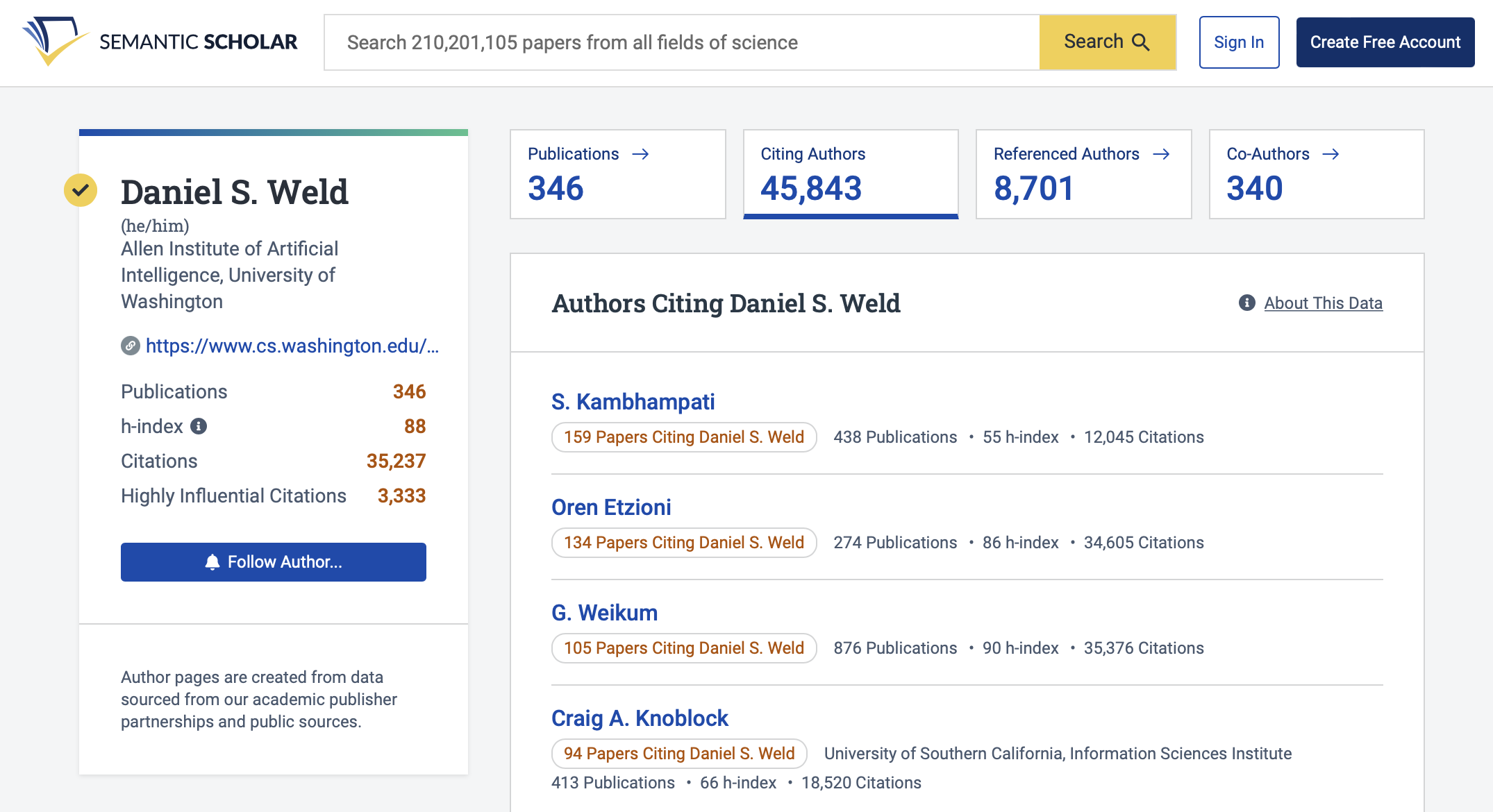

Daniel S. Weld

General Manager, Semantic Scholar, Author of TLDR: Extreme Summarization of Scientific Documents

"This is one of the most exciting applications I have seen in recent years! Not only are TLDRs useful for navigating through papers quickly, they also hold great potential for human-centered AI."

Mirella Lapata

AI2 Scientific Advisory Board Member, Professor in the School of Informatics at the University of Edinburgh

Computer Science Examples

TLDR This work introduces SCITLDR, a new multi-target dataset of 5.4K TLDRs over 3.2K papers, and proposes CATTS, a simple yet effective learning strategy for generating TLDRs that exploits titles as an auxiliary training signal.

TLDR We propose a state-of-the-art pipelined method for training neural paragraph-level question answering models on document QA data.

Biomedicine Examples

TLDR Although the outcomes of the secondary end points and predefined subgroup analyses suggest an advantage of the neoadjuvant approach, additional evidence is required.

TLDR It is observed that the oral microbiome variances were shaped primarily by the environment when compared to host genetics, and this has the potential to reveal novel host-microbial biomarkers, pathways, and targets important to effective preventive measures, and early disease control in children.

To learn more about the research powering TLDRs, read the paper TLDR: Extreme Summarization of Scientific Documents by Isabel Cachola, Kyle Lo, Arman Cohan, and Daniel S. Weld of the Semantic Scholar team at AI2.

Send your feedback about TLDRs on Semantic Scholar to: feedback@semanticscholar.org.